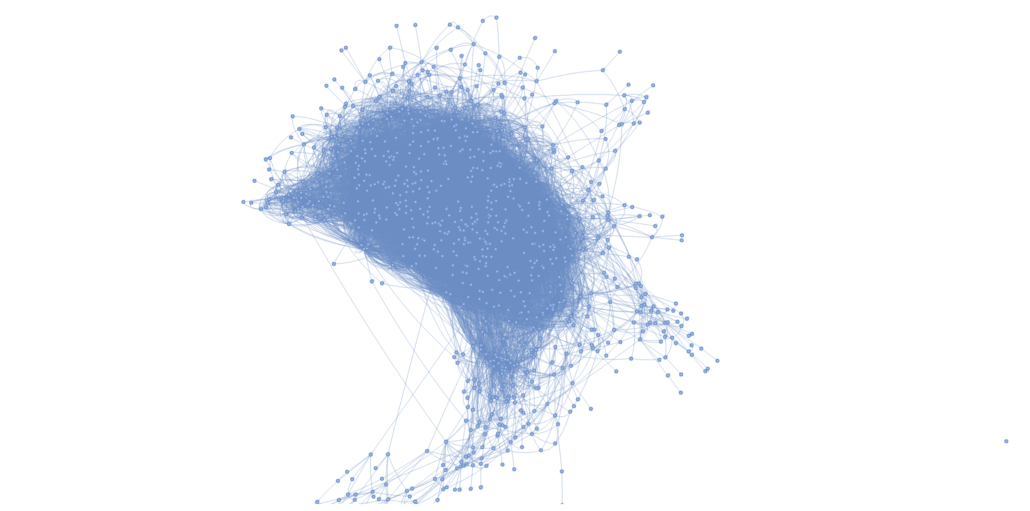

Writing Apache Spark with Rust! Spark Connect Introduced.

I never thought I would live to see the day; it’s crazy. I’m not sure whose idea it was to make it possible to write Apache Spark applications in Rust, Golang, or Python … but whoever it was is a genius.

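As of Apache Spark 3.4, it is now possible to use Spark Connect … a thin client API, built on top of the DataFrame API, that talks to a Spark cluster remotely. The client sends unresolved logical plans to the Spark server over gRPC and gets the results back as Apache Arrow batches.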

You can now connect backend systems and code written in Rust, Golang, or other languages to a Spark server, run commands, and get the results back remotely. Simply amazing. A new era of tools and products is going to be unleashed on us. We are no longer chained to the JVM. The walls have been broken down. The future is bright.
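Before any of that remote work happens, a Spark Connect client has to figure out where the server lives. Clients use a connection string of the form `sc://host:port/;key=value;...`, and the server listens on port 15002 by default. The sketch below parses that format in plain Rust using only the standard library. It is roughly the first step a non-JVM client performs before it opens its gRPC channel. The names `parse_connect_url` and `ConnectTarget` are hypothetical, chosen only for this illustration. They are not part of any real Spark Connect crate. The sketch also assumes plain hostnames or IPv4 addresses, with no IPv6 literals and no URL escaping.

```rust
use std::collections::HashMap;

/// Parsed pieces of a Spark Connect connection string (hypothetical type for illustration),
/// e.g. "sc://localhost:15002/;user_id=alice;use_ssl=false".
#[derive(Debug, PartialEq)]
pub struct ConnectTarget {
    pub host: String,
    pub port: u16,
    pub params: HashMap<String, String>,
}

/// Port the Spark Connect server listens on when none is given.
const DEFAULT_PORT: u16 = 15002;

/// Hypothetical helper: split an `sc://` URL into host, port and `;key=value` parameters.
/// Simplification: does not handle IPv6 literals or percent-encoding.
pub fn parse_connect_url(url: &str) -> Result<ConnectTarget, String> {
    let rest = url
        .strip_prefix("sc://")
        .ok_or_else(|| format!("expected an sc:// URL, got {url}"))?;

    // Everything before the first '/' is host[:port]; the rest holds ';key=value' pairs.
    let (authority, tail) = match rest.split_once('/') {
        Some((a, t)) => (a, t),
        None => (rest, ""),
    };

    // Fall back to the default port when none is specified.
    let (host, port) = match authority.rsplit_once(':') {
        Some((h, p)) => {
            let port = p
                .parse::<u16>()
                .map_err(|e| format!("bad port {p:?}: {e}"))?;
            (h.to_string(), port)
        }
        None => (authority.to_string(), DEFAULT_PORT),
    };
    if host.is_empty() {
        return Err("missing host".to_string());
    }

    // Collect the optional parameters, skipping empty segments such as the leading ';'.
    let mut params = HashMap::new();
    for pair in tail.split(';').filter(|s| !s.is_empty()) {
        let (k, v) = pair
            .split_once('=')
            .ok_or_else(|| format!("parameter {pair:?} is not key=value"))?;
        params.insert(k.to_string(), v.to_string());
    }

    Ok(ConnectTarget { host, port, params })
}

fn main() {
    let target = parse_connect_url("sc://localhost:15002/;user_id=alice;use_ssl=false").unwrap();
    // prints: localhost:15002 Some("alice")
    println!("{}:{} {:?}", target.host, target.port, target.params.get("user_id"));
}
```

A real client would hand the resulting host and port to a gRPC library and attach parameters such as `user_id` or `token` to its requests. Keeping the parsing separate makes it easy to test without a running Spark server.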