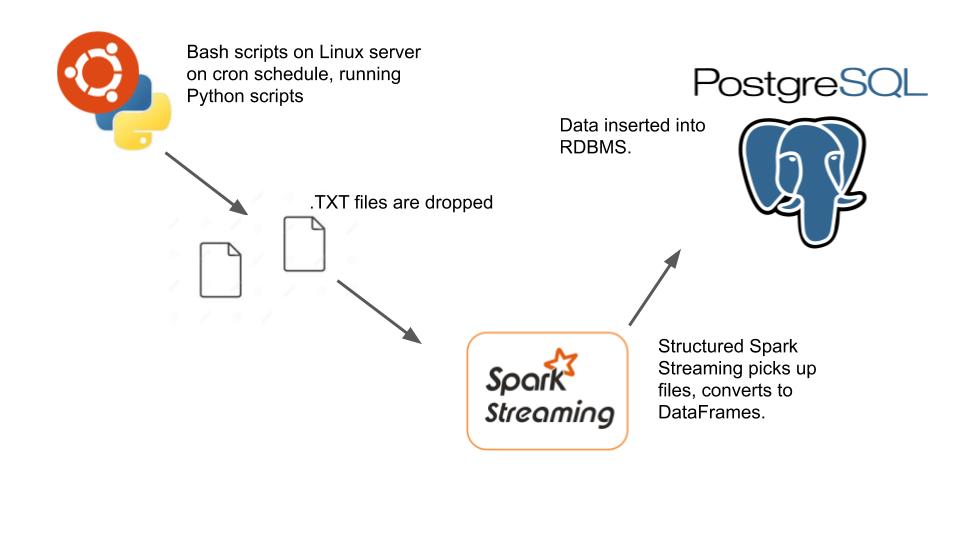

So after watching way too many end of the world movies on Netflix I decided the best way to prepare for the Zombie Apocalypse would be to give myself a way to know when the dead are about to crash through my living room window (while I’m eating popcorn watching zombies on on Netflix of course). This is one reason I love Python, I knew I would barely have to write any code to do this. I figured if I could scrape the popular news sites and do some simple sentiment analysis, get the government threat levels, some weather alerts etc, jam all this data together I would get a perfect Dooms Day clock to tell me how close we are to the end of the world on any given day. So lets begin. All the code is on GitHub. Here is visual of what I wanted.