Python and Data. Devils in the Details.

I work with Python and data a lot, specifically different RDBMS’s with structured data. Anyone who does this type of work will probably have run across pyodbc, a Python package that allows ODBC access into different

database platforms.

Today I’m looking at why it is important to understand the Python packages you work with and spend the extra time to look at the documentation. Also understanding the different functions and how they can make your code better, and faster!

Lets take a look at a table full of data and how we can extract it to a text file, which is a pretty common problem to solve.

First things first, below code will generate a table and a little over 3 million records. (This is T-SQL).

USE Your_Database CREATE TABLE dbo.TestData ( CustomerNumber INT, OrderNumber INT, Amount MONEY ) DECLARE @COUNT INT, @CustomerNumber INT, @OrderNumber INT, @Amount INT SET @COUNT = 0 WHILE @COUNT < 4800001 BEGIN SET @CustomerNumber = FLOOR(RAND()*(3154368-1+1))+1; SET @OrderNumber = FLOOR(RAND()*(3154368-1+1))+1; SET @Amount = FLOOR(RAND()*(1000-1+1))+1; INSERT INTO dbo.TestData VALUES (@CustomerNumber,@OrderNumber,@Amount) SET @COUNT = @COUNT + 1 END

Now that the data available lets take a stab. The first attempt I made included a quick search of the documentation for pyodbc. The obvious choice seemed like fetchall(), I mean that’s like a no brainer right? Fetch all my results and right them to a file, easy peasy. And the code is simple.

# 3,154,368 records, 679.477 seconds. (11 minutes) import pyodbc import os import time def sqlClass(): cnxn = pyodbc.connect('DRIVER={SQL Server};SERVER=YourServer;DATABASE=YourDatabase;UID=YourUser;PWD=Password') cursor = cnxn.cursor() return cursor def writeSlow(data): with open('C:\\results.txt','a',encoding='utf-8') as file: file.write(str(data.CustomerNumber) + ',' + str(data.OrderNumber) + ',' + str(data.Amount) + '\n') def main(): sql = sqlClass() rows = sql.execute('SELECT CustomerNumber,OrderNumber,Amount FROM dbo.TestData').fetchall() for row in rows: writeSlow(row) if __name__ == '__main__': t0 = time.time() main() t1 = time.time() total = t1-t0 print(total)

![]()

Unfortunately 11 minutes later while twiddling my thumbs….yeah this doesn’t work. What gives? Well, when i actually read the documentation on fetchall()….

“Returns a list of all the remaining rows in the query. Since this reads all rows into memory, it should not be used if there are a lot of rows. Consider iterating over the rows instead.”

Ok, makes sense, reading that much crud into memory is a bad idea. What next?

Lets try to iterate the rows like suggested. According to the docs fetchmany() sounds nice!

“fetchmany(size=cursor.arraysize) Returns a list of remaining rows, containing no more than size rows, used to process results in chunks. The list will be empty when there are no more rows”

So let’s take about 50,000 rows at at time, see what happens. Also,

why not use a generator to stream in those results for writing to the file. 14.67 seconds later, well that’s better than 11 minutes! I guess it does pay to read documentation after all.

# 3,154,368 records, 14.62 seconds. import pyodbc import os import time def sqlClass(): cnxn = pyodbc.connect('DRIVER={SQL Server};SERVER=YourServer;DATABASE=YourDatabase;UID=YourUser;PWD=Password') cursor = cnxn.cursor() return cursor def writeFile(data): with open('C:\\results.txt','a',encoding='utf-8') as file: for x in data: file.write(str(x.CustomerNumber) + ',' + str(x.OrderNumber) + ',' + str(x.Amount) + '\n') def results(sql): iter = sql.execute('SELECT CustomerNumber,OrderNumber,Amount FROM dbo.TestData') while True: rows = iter.fetchmany(50000) if not rows: break for row in rows: yield row def main(): sql = sqlClass() data = results(sql) writeFile(data) if __name__ == '__main__': t0 = time.time() main() t1 = time.time() total = t1-t0 print(total)

![]()

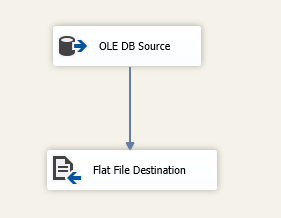

Also, just for fun i wrote a simple SSIS package to pull the data into a text file, just to see how fast that would run (locally on my machine) I setup a simple OLE DB data source to point at the table and a flat file connection to point to the text file on my desktop. Ran in about 4.641 seconds.

![]()

It bothered me that SSIS beat my Python, just out of principal. I mean I get it is SQL Server and SSIS, and by all rights it should be fast, but something about big guy vs little guy…. I guess free vs expensive…