Hadoop and Python. Peas In a Pod?

Last time I shared my experience getting a mini Hadoop cluster set up and running. It took lots of configuration and attention to detail. The next step in my grand plan is to figure out how I could use Python to interact with HDFS (store and retrieve files and metadata). I assumed that, since there are beautiful packages to install for all sorts of things, pip installing some HDFS thingy would be easy and away I would sail into the sunset. Yeah…not.

First, some background information. For Python to interact with Hadoop/HDFS we need to think about how that would happen, or what the options are.

1. Native RPC

Typically Java or Scala is used to make RPCs (remote procedure calls), and that is how communication with HDFS normally happens. This would be considered the “native” way to interact with HDFS.

2. WebHDFS

Another option is HTTP. Hadoop comes with something called WebHDFS. It’s basically a REST API that exposes the normal HDFS commands over HTTP.

3. libhdfs

Last but not least is libhdfs, a C language API into HDFS. This library comes with your Hadoop install.
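To make the WebHDFS option a bit more concrete, here is a minimal sketch of how its REST URLs are put together: everything lives under `/webhdfs/v1/` on the NameNode's HTTP address, and the operation goes in an `op` query parameter. The helper name `webhdfs_url`, the host, the port and the path are placeholders I made up for illustration, so substitute your own.

```python
from urllib.parse import quote, urlencode


def webhdfs_url(host, port, path, op, **params):
    """Build a WebHDFS REST URL such as .../webhdfs/v1/books?op=LISTSTATUS."""
    # WebHDFS paths are absolute, so make sure there is exactly one leading slash.
    hdfs_path = "/" + path.lstrip("/")
    # The operation name goes first, followed by any extra query parameters.
    query = urlencode({"op": op, **params})
    return "http://{}:{}/webhdfs/v1{}?{}".format(host, port, quote(hdfs_path), query)


# Hypothetical host, port and path; use your own NameNode's HTTP address.
print(webhdfs_url("localhost", 50070, "/user/me/books", "LISTSTATUS"))
```

Opening a URL like this in a browser or with curl is a quick way to see whether WebHDFS is reachable before bringing any Python library into the picture.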

There have been a few attempts to give Python a more native (non-HTTP) route into HDFS. The main one is PyArrow, which uses the libhdfs library mentioned above. The problem I found experimenting with this and other driver libraries like libhdfs3 is that the configuration must be exact, with no room for error; there is little to no good documentation; and none of them worked out of the box. Even Stack Overflow had hardly any help for the configuration errors I had to work through.

I almost went with a WebHDFS option called hdfscli (pip install hdfs), but some articles I read talked about its slowness for uploading and downloading files, and after testing it, I wanted to try the more native C approach via PyArrow. In case you want to use the pip install hdfs package, here is an important note about configuring Hadoop to allow the HTTP requests to work.

    pip install hdfs

How do you enable WebHDFS for your Hadoop installation? Go to your installation directory, find hadoop/etc/hadoop and open up the file called hdfs-site.xml (on the master and all worker nodes). Add the following property.

    <property>
      <name>dfs.webhdfs.enabled</name>
      <value>true</value>
    </property>

Easy as that. You will have to stop and restart your Hadoop environment after this change.
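If you want to double-check that the property really landed in the file on each node, a small sketch like the following can read it back. It relies only on the standard `<configuration>`/`<property>` layout of Hadoop config files; the helper name `hadoop_property` and the inline sample are my own stand-ins, not anything Hadoop ships.

```python
import xml.etree.ElementTree as ET


def hadoop_property(xml_text, name):
    """Return the value of a named <property> in Hadoop config XML, or None."""
    root = ET.fromstring(xml_text)
    for prop in root.iter("property"):
        if prop.findtext("name", "").strip() == name:
            return prop.findtext("value", "").strip()
    return None


# Inline sample standing in for the contents of hdfs-site.xml.
sample = """
<configuration>
  <property>
    <name>dfs.webhdfs.enabled</name>
    <value>true</value>
  </property>
</configuration>
"""

print(hadoop_property(sample, "dfs.webhdfs.enabled"))
```

To run it against a real node, read the text of hdfs-site.xml from your own hadoop/etc/hadoop directory and pass that in instead of `sample`.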

Here is the simplest way to connect your Python code to WebHDFS via HTTP. I struggled for a few minutes with connection errors. Some documentation will tell you to connect with hdfs://blahblahblah. This is not correct: the client needs the NameNode's HTTP address. I ended up getting two things wrong, the host (I was using node-master rather than localhost) and the port (9000 rather than 50070), when what I needed was http://localhost:50070. For reference, 50070 is the default NameNode HTTP port on Hadoop 2.x; Hadoop 3.x moved it to 9870. So basically, know your environment.

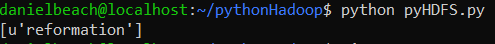

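    from hdfs import InsecureClient

    # WebHDFS is served from the NameNode's HTTP address, not the hdfs:// RPC URI.
    client = InsecureClient('http://localhost:50070', user='')


    def main():
        # List the contents of the 'books' directory in HDFS.
        directory = client.list('books')
        print(directory)


    if __name__ == '__main__':
        main()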

That was it. Now I could see a little light at the end of the tunnel: I can talk to HDFS with Python, so I feel like I’m in my happy place. And it was pretty easy to use.
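Listing a directory is only half the story, since the goal is storing files. Below is a rough sketch of an "upload unless it already exists" helper. It assumes a client that behaves like `hdfs.InsecureClient`, where `status(path, strict=False)` returns `None` for a missing path and `upload()` takes the HDFS destination first. The helper `upload_if_missing`, the `FakeClient` stand-in and the file names are all hypothetical, there only so the sketch runs without a cluster.

```python
def upload_if_missing(client, local_path, hdfs_path):
    """Upload local_path to hdfs_path unless something already exists there.

    `client` is expected to behave like hdfs.InsecureClient: status() with
    strict=False returns None for a missing path, and upload() takes the
    HDFS destination first, then the local source.
    """
    if client.status(hdfs_path, strict=False) is not None:
        return False  # already present, nothing to do
    client.upload(hdfs_path, local_path)
    return True


class FakeClient:
    """Tiny in-memory stand-in so the sketch runs without a cluster."""

    def __init__(self):
        self.files = {}

    def status(self, hdfs_path, strict=True):
        # Returns None when the path is unknown, like strict=False would.
        return self.files.get(hdfs_path)

    def upload(self, hdfs_path, local_path):
        self.files[hdfs_path] = {"source": local_path}
        return hdfs_path


fake = FakeClient()
print(upload_if_missing(fake, "moby_dick.txt", "books/moby_dick.txt"))  # True
print(upload_if_missing(fake, "moby_dick.txt", "books/moby_dick.txt"))  # False
```

With a real cluster you would pass in the `InsecureClient` instance from the script above instead of `FakeClient()`.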

But, as I mentioned earlier, I decided to try the PyArrow route. After pip installing PyArrow, which comes with an HDFS library, the rest is really configuration, or more like knowing your configuration.

    pip install pyarrow

The first thing you need to do is set five environment variables. You must either already have them set in your shell or set them in your script, like I did.

1. JAVA_HOME – You need the JAVA_HOME path, which should be set already for use in your Hadoop installation.

2. HADOOP_HOME – Just go find your installation directory.

3. ARROW_LIBHDFS_DIR – This is the directory containing a file called libhdfs.so, which is the library itself. It will most likely be found inside your HADOOP_HOME directory in lib/native.

4. HADOOP_CONF_DIR – There seems to be some conflicting advice as to whether the Hadoop configuration directory needs to be set. I set it, using the same path as HADOOP_HOME.

5. CLASSPATH – All the Hadoop JAR files.
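Since a single missing variable is enough to break the connection, it can help to check all five before trying to connect. This is just a minimal sketch; the helper name `missing_hadoop_vars` is my own, and it only checks that each variable is set to something non-empty, not that the paths are correct.

```python
import os

# The five variables from the list above.
REQUIRED_VARS = ("JAVA_HOME", "HADOOP_HOME", "ARROW_LIBHDFS_DIR",
                 "HADOOP_CONF_DIR", "CLASSPATH")


def missing_hadoop_vars(env=None):
    """Return the names of required variables that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name)]


missing = missing_hadoop_vars()
if missing:
    print("Not set:", ", ".join(missing))
else:
    print("All five variables are set.")
```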

CLASSPATH, the last environment variable, is the trickiest to get right, but nothing will work without it. It is basically all the Hadoop JAR files that get passed to the JVM on startup. You can see in my example below that I used glob in Python to go where Hadoop stores all the JAR files (in HADOOP_HOME, then share/hadoop) and get them all into a single string where the full file paths are separated by a “:”. It took me a while to figure out this needed to be done. The Hadoop errors you get if you don’t do this right are unhelpful, and many documents online tell you that as long as HADOOP_HOME is set the system should be able to figure the rest out itself. This was not the case! The code for this can be found on GitHub.

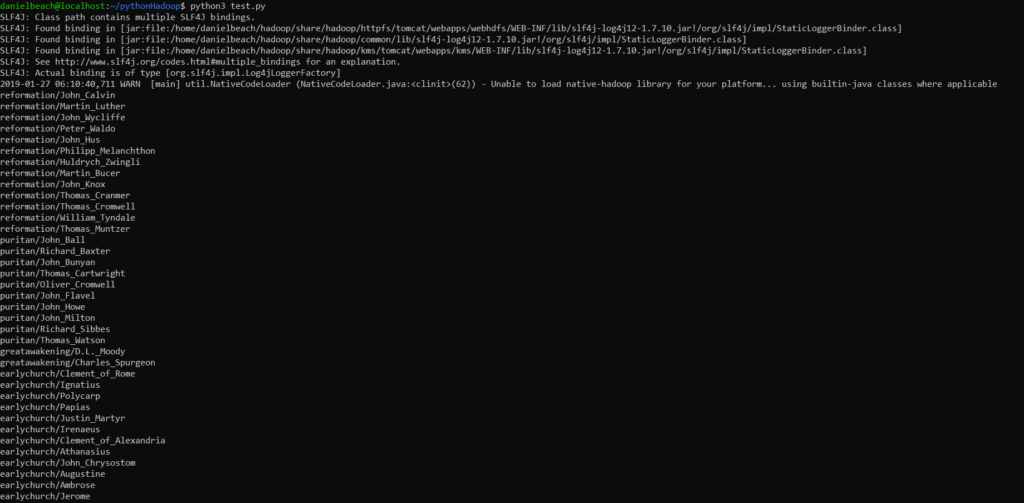

    import glob
    import json
    import os

    import pyarrow as pa

    # directories.json maps each parent directory to a list of subdirectories.
    with open('directories.json') as f:
        data = json.load(f)

    # libhdfs reads all of these from the environment when it starts the JVM.
    os.environ['HADOOP_HOME'] = "/home/danielbeach/hadoop/etc/hadoop"
    os.environ['JAVA_HOME'] = '/usr/lib/jvm/java-8-openjdk-amd64/jre'
    os.environ['ARROW_LIBHDFS_DIR'] = '/home/danielbeach/hadoop/lib/native'
    os.environ['HADOOP_CONF_DIR'] = '/home/danielbeach/hadoop/etc/hadoop'

    # CLASSPATH must list every Hadoop JAR, with the full paths separated by ':'.
    hadoop_jars = glob.glob('/home/danielbeach/hadoop/share/hadoop/**/*.jar', recursive=True)
    os.environ['CLASSPATH'] = ':'.join(hadoop_jars)

    hdfs = pa.hdfs.connect(host='default', port=9000, driver='libhdfs', user='danielbeach')

    # Create each directory if it is missing, otherwise list its contents.
    for k, v in data.items():
        for item in v:
            directory = "{}/{}".format(k, item)
            if not hdfs.exists(path=directory):
                hdfs.mkdir(path=directory)
            else:
                print(hdfs.ls(path=directory))

This gives you a sample of how easy it is to interact with HDFS via PyArrow once you get it up and running. The above script just reads in a JSON file I have with a list of directories I wanted. It is very easy to create an HDFS object by simply calling pyarrow.hdfs.connect() and passing in the default parameters. This is where the errors will start if your configuration is off.
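For anyone wondering what the JSON file looks like, here is a sketch of the shape the script expects: each key is a parent directory and each value is a list of subdirectories. The directory names below are invented for illustration, and the helper `expand_directories` is hypothetical; it just mirrors the nested loop so you can see which paths would be checked.

```python
import json

# Hypothetical contents for directories.json; these names are made up.
sample_json = """
{
  "books": ["fiction", "history"],
  "logs": ["2019"]
}
"""


def expand_directories(mapping):
    """Turn {parent: [child, ...]} into a flat list of 'parent/child' paths."""
    return ["{}/{}".format(parent, child)
            for parent, children in mapping.items()
            for child in children]


paths = expand_directories(json.loads(sample_json))
print(paths)  # ['books/fiction', 'books/history', 'logs/2019']
```

Building the list of paths first also makes it easy to eyeball what is about to be created before touching the cluster.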

Most of the typical HDFS functions are then available. I used hdfs.exists() to check whether each directory had already been created, hdfs.mkdir() to create the missing ones, and hdfs.ls() to see what’s inside the existing ones. Super easy! Being able to use Python to interact with my Hadoop cluster is perfect because I plan on using Python to download/scrape the files I need, and being able to pass them directly into HDFS will make the data pipeline seamless for me!

Next time I will show how I’m going to use Python to download/scrape the data I’m looking for from the Web and store/retrieve these files in my HDFS cluster.

Have you tried working with the cluster using Java? In my view the title is more fitting to Java and HDFS than it is to Python. My main challenge is keeping library directories up to date across all of the worker nodes. Sure, you can easily push updates to all machines…the problem is that add-on libraries usually require specific Python versions. Updating a library can break currently running code. Compare that with fat .jar files, where all the necessary libraries are contained in the jar itself.

I agree with you. On the face of it, working with Python and Hadoop should be easy, but in reality it is far from it. I’ve been disappointed with the amount of finagling it takes just to get them talking together in a nice way; only someone with a lot of patience and time will do it. I’m planning on running Spark inside YARN and hoping PySpark will solve my problems, since I really just want Spark in the first place and Hadoop just as a store. Java is too much for me. I’ve been messing with Scala just a touch; I wonder if that would make my Hadoop life easier?