Data Modeling in DeltaLake (DataBricks)

Time to open a can of worms. I’ve recently been working with DataBricks, specifically DeltaLake (which I wrote about here). DeltaLake is an amazing tool that when paired with Apache Spark, is like the juggernaut of Big Data. The old is new, the new is old. The rise of DataBricks and DeltaLake is proof of the age old need for classic Data Warehousing/Data Lakes is as strong as ever. While this Spark+DeltaLakes tech stack is amazing, it’s not your Grandma’s data warehouse, it’s fundamentally different under the hood. One of the topics I’ve been thinking about lately has been data modeling in DeltaLake (on DataBricks or not).

Data Modeling in DeltaLake (DataBricks)

So, data modeling in DeltaLake+Spark. What to do, what to do? I spent a fair amount of time Googling the subject and pretty much came up with a big fat nothing. And I guess this makes sense, data modeling is a big topic with classic data warehouse technologies using RDBMS like Oracle, Postgres, MySQL, SqlServer. Your data model pretty much would make the project sink or swim. In the past the correct data model that was usually the horse that got beaten to death.

This is the world where Kimball reigns supreme.

But that world has slowly been dying, and data modeling just doesn’t seem to get talked about that much anymore. Most people using Spark these days are more concerned about file types like Parquet, compression, file sizes, storage layers like s3 etc.

I think part of the reason for this is that Spark in general is more forgiving the classic RDBMS. It’s better and faster at Big Data, people just like to brute force stuff. They just make the cluster bigger. So the work of in depth data modeling for Spark+DeltaLake just kinda slowly faded away into the background.

Why should we bother with data modeling on Spark+DeltaLake?

Stepping back from the problem a little bit, why would we want to spend a lot of time thinking about how to model data when using DeltaLake and Spark? I can think of a few reasons, but they are more ephemeral than just for performance, in fact it really has almost nothing to do with performance.

What does data modeling help with?

- normalization (control the duplication of data)

- beyond the obvious, it just makes it confusing for future developers and others, how do they know with column to use from which dataset, what is truth?

- reduce complexity of transformations.

- a messy data model (or not even data modeling at all) will lead to messy and complex transformations …. because data is messy.

- forces the answering of business logic questions up front.

- much too often hidden requirements that break things and cause re-writes don’t come up till too late. Data modeling will show which rocks are poking up through the river.

- a good data model showing how data flows through the pipelines becomes the best documentation you’ve ever written.

- for new engineerings, or anyone, visually reviewing a data model gives you more information than reading 2 pages of documentation.

How far down the data modeling rabbit hole should you go?

Probably not as far as you think, and probably farther then you want to. Haha.

Honestly the thought of designing a data model with primary and surrogate keys in a full-blow Kimball style data warehouse running on DeltaLake+Spark gives me the willies. I’m guessing that the data requirements should probably dictate how far you take the data model.

Introducing complexity for the sake of having nothing better to do is not a good idea. Only go as far as is needed when data modeling. I think it’s great to have facts and dimensions in DeltaLake, because it helps you think about the data, the type of data, how the data is used, and forces a sort of simplicity that will make the codebase and ETL simpler as well.

Example Data Model for DeltaLake.

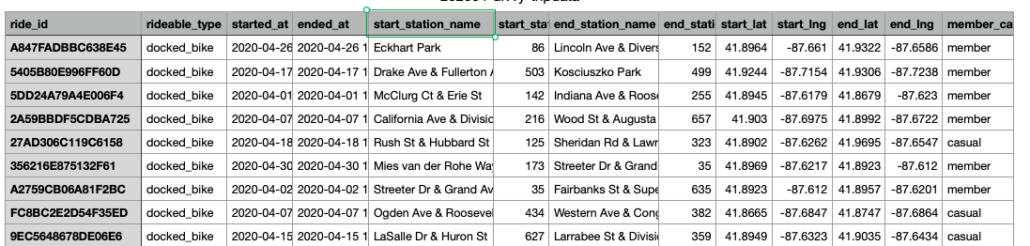

Let’s walk through an example of how not data modeling could be a bad idea in the long run, and how using a simple data modeling approach could make our lives easier. Again, we will use the free Divvy Bike Trips data set.

This is a simple example, but let’s talk about a few different approaches. Let’s say we for the sake of argument we get a few files per day, in total, together we get the following headers and data.

ride_id, rideable_type, started_at, ended_at, start_station_name, start_station_id, end_station_name, end_station_id, start_lat, start_lng, end_lat, end_lng, member_casual

Generally speaking you will have people who will follow the one of the three following approaches, or somewhere inbetween.

- put everything in one file/table.

- break data up logically.

- facts and dimensions, history tracking, go the whole 9 yards.

Problem with the first approach. (put everything in one file/table)

The problem with just dropping everything into a single file or table is that it might be the easy answer up front, but those with experience know the problems will come later on down the road. When you keep doing that approach over and over again, new data, new table, blah blah, you wake up later and realize you have data everywhere, duplicated, inconsistent, called different things, and spaghetti code to support it.

Sure, on the surface it’s easy to blow by this sort of decision and hammer out the quick code to hold and store all data in a single table/file.

from pyspark.sql import SparkSession, DataFrame, Window

import pyspark.sql.functions as F

spark = SparkSession.builder.appName('DataModeling').getOrCreate()

spark.sql("""

CREATE OR REPLACE TABLE trip_data (

ride_id STRING,

rideable_type STRING,

started_at TIMESTAMP,

ended_at TIMESTAMP,

start_station_name STRING,

start_station_id INT,

end_station_name STRING,

end_station_id INT,

start_lat REAL,

start_lng REAL,

end_lat REAL,

end_lng REAL,

member_casual STRING

)

USING DELTA

LOCATION '/data_warehouse/trips'

PARTITIONED BY (started_at);

""")

df = spark.read.csv('/tmp/202004-divvy-tripdata.csv', header='true')

df.write \

.format("delta") \

.mode("append") \

.save("/data_warehouse/trips")But as the data grows and changes over time, as more analytics are required the smash and grab approach that was once easy starts to spring leaks. New columns keep getting appended, the data itself starts to change leading to confusion. It’s basically the wild west.

If you don’t put any guard rails up on the data, don’t be surprised 2 years later when you have a mess on your hands.

Approach number 2 (break data up logically).

After spending years working on data warehousing teams, drinking the Kimball kool-aid to the exclusion of all others, and seeing the good, the band, and the ugly, this hybrid approach is probably my favorite. I like the general approach of breaking up data into its logical components, if anything it helps you understand the type of data you have, the velocity, what data is mutable and what is immutable.

Moving to the third-normal form and putting some very basic logic on-top of our data model is probably the biggest bang for you buck. It makes the data easier to deal with and understand. It forces you to think a little harder about the data you have, it’s attributes, and it’s possible future mutations.

In our simplistic example it might look something like this….

from pyspark.sql import SparkSession, DataFrame, Window

import pyspark.sql.functions as F

spark = SparkSession.builder.appName('DataModeling').getOrCreate()

spark.sql("""

CREATE OR REPLACE TABLE trip_data (

ride_id STRING,

rideable_type STRING,

started_at TIMESTAMP,

ended_at TIMESTAMP,

member_casual STRING,

start_station_id INT,

end_station_id INT,

)

USING DELTA

LOCATION '/data_warehouse/trip_rides'

PARTITIONED BY (started_at);

""")

spark.sql("""

CREATE OR REPLACE TABLE trip_locations (

start_station_name STRING,

start_station_id INT,

end_station_name STRING,

end_station_id INT,

start_lat REAL,

start_lng REAL,

end_lat REAL,

end_lng REAL,

)

USING DELTA

LOCATION '/data_warehouse/trip_locations'

PARTITIONED BY (start_station_id);

""")In our simple example the obvious thing to do is realize we have to different types of data. We have probably higher incoming volume data that tells about a unique bike trip someone took. Secondly, we have heavily repeated information that basically includes all the descriptive information about the location(s) of the bike trip(s).

When we have many hundreds of tables and datasets it can make sense to start to breaking our data up into these logical units. There is also no point in storing the names of the bike stations over and over again as well as the lat and long coordinates, when a numeric id is good enough (station_id). We can use this numeric id to retrieve the other information when needed.

Approach number 3 (Facts + Dimensions + keys).

This is the approach i’m very torn about. I’ve worked inside these types of “true” data warehouses over the years. In defense of this approach they probably provide the best overall consistency and structure and data governance. The Kimball type data warehouse with Facts and Dimensions, complete with primary and surrogate keys, history going back forever on all dimensions can be truly powerful.

But, it comes at a price. The complexity added to this type of data warehouse and pipeline is not trivial. And, I would only say it is worth it if the requirements call for this complexity.

Just like any software person can over engineer a solution, data engineers and developers can easily get caught up in trying to solve problems that don’t need to be solved.

Do you really need history tracking on every single dimension, does the business even care? These types of questions should be answered first before jumping off the deep end.

Here is what our scenario could like with our sample data. This is what would happen if you gave it a classic data warehousing developer.

from pyspark.sql import SparkSession, DataFrame, Window

import pyspark.sql.functions as F

spark = SparkSession.builder.appName('DataModeling').getOrCreate()

spark.sql("""

CREATE OR REPLACE TABLE trip_data (

ride_id STRING,

type_id INT,

started_at TIMESTAMP,

ended_at TIMESTAMP,

member_id INT,

start_station_id INT,

end_station_id INT,

)

USING DELTA

LOCATION '/data_warehouse/trip_rides'

PARTITIONED BY (started_at);

""")

spark.sql("""

CREATE OR REPLACE TABLE bike_types (

type_id INT,

rideable_type STRING

effective_start_date TIMESTAMP,

effective_end_date TIMESTAMP,

active_flag BIT

)

USING DELTA

LOCATION '/data_warehouse/bike_types'

PARTITIONED BY (rideable_type);

""")

spark.sql("""

CREATE OR REPLACE TABLE members (

member_id INT,

member_casual STRING

effective_start_date TIMESTAMP,

effective_end_date TIMESTAMP,

active_flag BIT

)

USING DELTA

LOCATION '/data_warehouse/members'

PARTITIONED BY (rideable_type);

""")

spark.sql("""

CREATE OR REPLACE TABLE trip_locations (

start_station_name STRING,

start_station_id INT,

end_station_name STRING,

end_station_id INT,

start_lat REAL,

start_lng REAL,

end_lat REAL,

end_lng REAL,

)

USING DELTA

LOCATION '/data_warehouse/trip_locations'

PARTITIONED BY (start_station_id);

""")This is overly simplified, and probably in real life it would be a little bit more complex. You tell me which one of the three scenarios would have the most complicated ETL and pipeline? I only recommend this pattern in certain orgs where the requirements call for it.

Although very powerful, I don’t think anyone should dive into the deep end without thinking it through.

Musings on Data Modeling in DeltaLake (DataBricks)

I think that data modeling in DeltaLake (DataBricks) is an interesting topic. There are probably some performance considerations that should be thought about if you are working with PB’s of data, if you are around 10TB~, I’m not sure how much time I would spend worrying about join performance.

I think the reason you would want to think about data modeling in DeltaLake is the same reason you would want to think about data modeling in any data warehouse or lake house, data mart, whatever. Just like we write reusable, logical code, we should have the same flexibility and practices with how we model our data.

I tend to think the payoff is more on the side of reducing the complexity and confusion by de-duping our data, making it fit into logical tables and models that everyone can understand and develop off of. A little bit of structure can go a long way in solving messing data issues down the road.

When I have to choose, I would choose the option number 2 above. I would drive for a more hybrid approach, something that doesn’t completely embrace the parent and surrogate key approach unless absolutely necessary.