Apparently Apache Hive is still a thing…. I should probably learn it.

So what’s up with Apache Hive? It’s been around a long time…but all the sudden it seems like it’s requirement in every other job posting these days. “It’s not you… it’s me.” That’s what I would tell Hive if it suddenly materialized as Mr. Smith via the Matrix that I’m pretty sure is the new reality these days. I’ve been around Hadoop and Spark for awhile now and I feel like Hive is that weird 2nd cousin who shows up at Thanksgiving. You know you should like and be nice to him, but you’re not sure why. It seems like Hive sits in a strange world. It’s not a RDBMS, although it does ACID, but it’s touted as a Data Warehousing tool. Time to dig in.

Apache Hive – Just another Data Warehouse solution?

“…data warehouse software facilitates reading, writing, and managing large datasets residing in distributed storage using SQL.”

Ok, so I get it a kinda. I’ve been around Data Warehousing enough to realize the importance of HDFS/Hadoop….although S3 and GS have changed the storage game forever. It’s just too easy to use, although Hive does support S3 as a storage backend. The typical RDBMS is great for storing data that has been run through ETL, I’ve seen MySQL/Postgres handle hundreds of millions of records without too much problem. But, the problems always come. It’s been my experience that when tables in these RDBMS’s start to wander over the 500 million record mark… much thought has to be put in place. Indexes, 3rd normal form..these all become extremely important. Starting to use read replicas, high-availability.

The point is yes you can get fancy and maybe even pay a lot of money to run some wonderful SQL Servers for billions of records…but it depends on the use case. It seems this is where Hive comes in. Petabyte scale. If you need analytics run at a massive scale, with the ease of SQL/HQL, using open source source software… Hive is that tool.

Although from working with Athena and BigQuery it does seem like the whole write SQL to analyze file thing is the main thrust there.

The benefits of Apache Hive as a Warehousing/Analytics tool.

- the storage of data in a filesystem with popular formats, without the typical ETL ingest and re-storage.

- analytics on a scale not possible with the traditional RDBMS.

- newer versions support ACID.

- You can use SQL to analyze this data.

- Hadoop backend (tried and true reliable and tolerant.)

Installing Apache Hive with HDFS/Hadoop backend.

First, I figured out which version of hadoop I have installed on my cluster.

>> hadoop version

>> Hadoop 2.7.3Next I’m going to curl down the version of Hive that works with my hdfs

curl ftp://apache.cs.utah.edu/apache.org/hive/hive-2.3.7/apache-hive-2.3.7-bin.tar.gz > apache-hive-2.7.3-bin.tar.gz

tar -xzvf apache-hive-2.7.3-bin.tar.gzNeed to add Hive to path.

vim ~/.bashrc

# add below lines

export PATH=/home/beach//apache-hive-2.3.7-bin:$PATH

export HIVE_HOME=/home/beach/apache-hive-2.3.7-bin

source ~/.bashrcThe next set of Hadoop hdfs commands need to be run to setup the Hive “metastore.”

hdfs dfs -mkdir /tmp

hdfs dfs -mkdir -p /user/$HIVE_USER

hdfs dfs -mkdir /user/hive/

hdfs dfs -mkdir /user/hive/warehouse

hdfs dfs -chmod g+w /tmp

hdfs dfs -chmod g+w /user/hive/warehouseIf you set HIVE_HOME above, we can now access the Hive CLI by running $HIVE_HOME/bin/hive at the bash.

Apparently we have to run a schema tool command, whatever that is.

$HIVE_HOME/bin/schematool -dbType derby -initSchema

// output

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/beach/apache-hive-2.3.7-bin/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/beach/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Metastore connection URL: jdbc:derby:;databaseName=metastore_db;create=true

Metastore Connection Driver : org.apache.derby.jdbc.EmbeddedDriver

Metastore connection User: APP

Starting metastore schema initialization to 2.3.0

Initialization script hive-schema-2.3.0.derby.sql

Initialization script completed

schemaTool completedUnderstanding HiveServer2 and Beeline

So following most install instructions tell you to setup HiveServer2 and Beeline, but don’t tell you what they are. HiveServer2…..

“… a server interface that enables remote clients to execute queries against Hive and retrieve the results..”

So that makes sense I guess, connect via some client via JDBC and run Hive queries and pull results. Beeline is the CLI used to connect to and use HiveServer2. The next part i found very confusing and not obvious from the documentation. So with the HiveServer2 running in the background… connecting via Beeline to Hive you use a JBDC url. That url changes depending on if you are running your Beeline prompt on the same machine as HiveServer2 is running.

- running Beeline and HibeServer2 on same machine? – connect to

jdbc:hive2:// - running Beeline from another machine? – connect via

jbdc:hive2://localhost:10000<.— of course changing ip address and port if configured differently.

$HIVE_HOME/bin/beeline

beeline> !connect jdbc:hive2://

Connecting to jdbc:hive2://

Enter username for jdbc:hive2://:

Enter password for jdbc:hive2://:

20/10/15 01:23:52 [main]: WARN session.SessionState: METASTORE_FILTER_HOOK will be ignored, since hive.security.authorization.manager is set to instance of HiveAuthorizerFactory.

Connected to: Apache Hive (version 2.3.7)

Driver: Hive JDBC (version 2.3.7)

Transaction isolation: TRANSACTION_REPEATABLE_READ

0: jdbc:hive2://>Loading data into Hive.

So this apparently is pretty easy and pretty much what I thought it would be. The DDL statements for creating Hive tables will be familiar to anyone who’s been around SQL for more than 2 weeks. The big difference here is thinking about/knowing what file format on the backend you want to use. Since the files will be/are stored in HDFS they can be…

- parquet

- orc

- avro

- textfile (csv, json, etc) (each newline is a new record)

- blah, blah

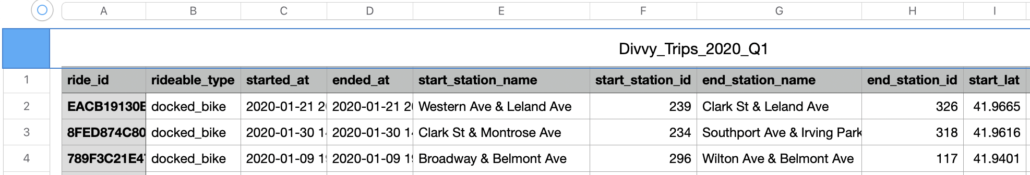

You should be familiar with some of the big data file formats, the pros and cons of each filetype and select the appropriate one. For my examples I’m just going to use the free Divy bike trips datasets, which are csvs. Like I said, the DDL create statements are straightforward and default to text file.

Partitions are an important topic in Hive, they dictate how the data is stored and help with queries in the WHERE clause from what I can tell. I’m going to treat them similar to how I would indexing a table in a RDBMS without going overboard, until I learn my lesson probably. In my case with this data, I will probably skip the partition for now.

Note to self, in the CREATE table DDL statement you don’t repeat a column name in the definition and Partition statement… its not like RDBMS index creation, a column called out as a PARTITIONED BY will be added as a column.

Time to load some of the trip data I have stored in HDFS already into this table.

hdfs dfs -ls /tripdata

Found 4 items

-rw-r--r-- 2 beach supergroup 50528553 2020-09-23 14:58 /tripdata/Divvy_Trips_2019_Q1.csv

-rw-r--r-- 2 beach supergroup 152598335 2020-09-23 14:56 /tripdata/Divvy_Trips_2019_Q2.csv

-rw-r--r-- 2 beach supergroup 225527165 2020-09-23 14:53 /tripdata/Divvy_Trips_2019_Q3.csv

-rw-r--r-- 2 beach supergroup 97031682 2020-09-23 14:52 /tripdata/Divvy_Trips_2019_Q4.csvjdbc:hive2://> CREATE EXTERNAL TABLE trip_data (ride_id STRING, rideable_type STRING, started_at TIMESTAMP, ended_at TIMESTAMP, start_station_name STRING, start_station_id INT, end_station_name STRING, end_station_id INT, start_lat DECIMAL, start_lng DECIMAL, end_lat DECIMAL, end_lng DECIMAL, member_casual STRING) ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' STORED AS TEXTFILE LOCATION 'hdfs://master:9000/tripdata'tblproperties ("skip.header.line.count"="1"); OK No rows affected (2.231 seconds) jdbc:hive2://>

Note, there are number of ways to load data into Hive tables. You can copy from local, from hdfs, create a table then load data into it. Kinda up to your use case.

SELECT * FROM trip_data LIMIT 10;

// ouput

+--------------------+--------------------------+------------------------+-------------------------------+-----------------------------+-----------------------------+---------------------------+----------------------+----------------------+--------------------+--------------------+--------------------------+

| trip_data.ride_id | trip_data.rideable_type | trip_data.ended_at | trip_data.start_station_name | trip_data.start_station_id | trip_data.end_station_name | trip_data.end_station_id | trip_data.start_lat | trip_data.start_lng | trip_data.end_lat | trip_data.end_lng | trip_data.member_casual |

+--------------------+--------------------------+------------------------+-------------------------------+-----------------------------+-----------------------------+---------------------------+----------------------+----------------------+--------------------+--------------------+--------------------------+

| 21742443 | 2019-01-01 00:04:37 | 2019-01-01 00:11:07.0 | 2167 | 390 | 199 | NULL | 84 | NULL | NULL | NULL | 1989 |

| 21742444 | 2019-01-01 00:08:13 | 2019-01-01 00:15:34.0 | 4386 | 441 | 44 | NULL | 624 | NULL | NULL | NULL | 1990 |

| 21742445 | 2019-01-01 00:13:23 | 2019-01-01 00:27:12.0 | 1524 | 829 | 15 | NULL | 644 | NULL | NULL | NULL | 1994 |

| 21742446 | 2019-01-01 00:13:45 | 2019-01-01 00:43:28.0 | 252 | NULL | 783.0" | 123 | NULL | 176 | NULL | NULL | Male |

| 21742447 | 2019-01-01 00:14:52 | 2019-01-01 00:20:56.0 | 1170 | 364 | 173 | NULL | 35 | NULL | NULL | NULL | 1994 |

| 21742448 | 2019-01-01 00:15:33 | 2019-01-01 00:19:09.0 | 2437 | 216 | 98 | NULL | 49 | NULL | NULL | NULL | 1983 |

| 21742449 | 2019-01-01 00:16:06 | 2019-01-01 00:19:03.0 | 2708 | 177 | 98 | NULL | 49 | NULL | NULL | NULL | 1984 |

| 21742450 | 2019-01-01 00:18:41 | 2019-01-01 00:20:21.0 | 2796 | 100 | 211 | NULL | 142 | NULL | NULL | NULL | 1990 |

| 21742451 | 2019-01-01 00:18:43 | 2019-01-01 00:47:30.0 | 6205 | NULL | 727.0" | 150 | NULL | 148 | NULL | NULL | Male |

| 21742452 | 2019-01-01 00:19:18 | 2019-01-01 00:24:54.0 | 3939 | 336 | 268 | NULL | 141 | NULL | NULL | NULL | 1996 |

+--------------------+--------------------------+------------------------+-------------------------------+-----------------------------+-----------------------------+---------------------------+----------------------+----------------------+--------------------+--------------------+--------------------------+

10 rows selected (7.244 seconds)

Musings on Hive.

Sweet! Well that was easy. I can see how Hive would be a very powerful tool in the Big Data world. Especially if you already have terrabytes/petabytes of data sitting around in HDFS. This would kinda be a no brainer. Here’s what else I learned…

- The documentation for Hive is sorta a joke. It’s just terrible compared to other big data tools.

- Installation is super easy.

- The CLI (command line interface) for playing with Hive (Beeline) is super not obvious.

- It’s hard to know how to configure Hive.

- Writing SQL on a huge pile of structured files is sweet.

- It probably only makes sense to use Hive with data on a massive scale.