Why Data Migrations Suck.

I’ve often wondered what purgatory would be like, doing penance for millennia on end. It would probably be doing data migrations. I suppose they are not all that dissimilar from normal software migrations, but there are a few things that make data migrations a little more horrible and soul-sucking. Data migrations can slow teams down to a crawl, take at least twice as long as planned, and turn out way more difficult than anyone imagined.

Can’t it be made easy? Shouldn’t Data Migrations have been conquered by now? I mean, just put together the perfect plan, break up the work, make a bunch of tickets, estimate the work, and the rest falls into place, right? If only.

What is a Data Migration?

Much like any software migration, not every Data Migration is the same and there are a variety of categories that any migration might fall into.

Each has its own set of unique challenges that have driven many an engineer to drink.

- Swapping out tool(s).

- Brand new tooling architecture.

- New architecture + data model.

- Data model upgrade.

- Storage layer change.

- Some combination of the above.

What makes Data Migrations so tricky and fraught with trouble and woe? Primarily that each piece of the Data Stack interacts with the data in a tightly coupled way. In theory there is a separation of concerns, so that you could, for example, swap out a storage or compute layer without major changes.

But, the reality is that a lot of data stack pieces are more tightly coupled than we want. This just happens over time, a slow evolution towards chaos and the breakdown of the separation of concerns.

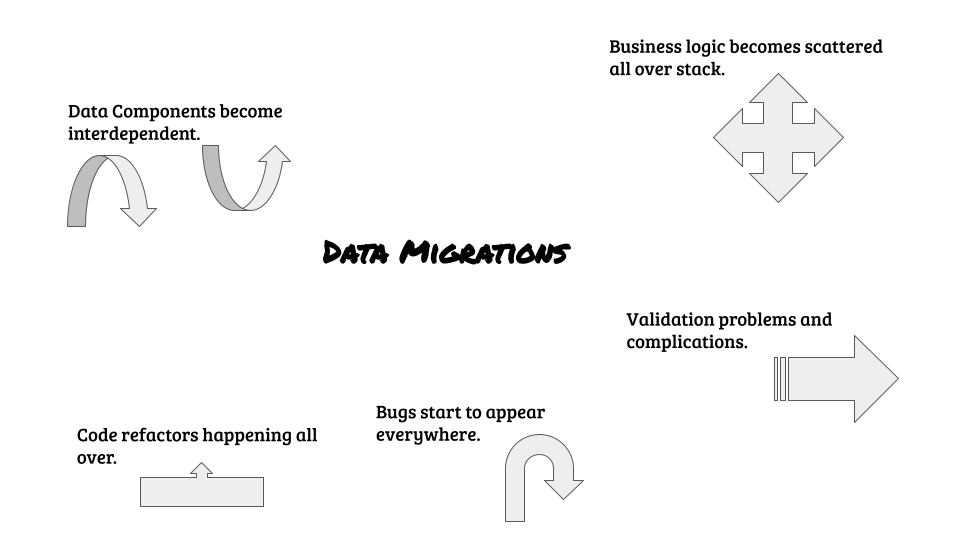

- Data components slowly become dependent on each other.

- Business logic eventually becomes embedded all over the stack.

- Seemingly simple details become a major pain.

- Typically refactors are taking place at the same time.

- How to validate the migration results gets complicated.

Data components slowly become dependent on each other.

We all have grand designs. In the beginning, our code soars upwards like our hearts, full of hope for the future. But things change, deadlines come and go, and features get added.

As business logic changes and grows so do the interdependencies and complexities of data pipelines and their components.

And when the time comes for a data migration, all these known and unknown dependencies have to be unwound and examined, both for their impact and for whether they should exist at all in the new platform that is being developed.
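One way to start unwinding those dependencies is to write them down as a graph and let the computer work out what touches what. The sketch below is only an illustration: the component names are made up, and it assumes Python 3.9+ so that the standard library `graphlib` module is available.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline components; each one maps to the components it depends on.
dependencies = {
    "raw_orders": set(),
    "clean_orders": {"raw_orders"},
    "customer_dim": {"raw_orders"},
    "revenue_report": {"clean_orders", "customer_dim"},
}


def migration_order(deps):
    """Return components ordered so that dependencies come before dependents."""
    return list(TopologicalSorter(deps).static_order())


def downstream_of(deps, component):
    """Return every component that directly or indirectly depends on `component`."""
    impacted = set()
    frontier = [component]
    while frontier:
        current = frontier.pop()
        for name, needs in deps.items():
            if current in needs and name not in impacted:
                impacted.add(name)
                frontier.append(name)
    return impacted
```

Calling `downstream_of(dependencies, "raw_orders")` lists everything that could break if that one source changes, which is a decent first pass at the "impact" question. The unknown dependencies are still on you to find.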

Business logic eventually becomes embedded all over the stack.

Few things in life are trickier and more insidious than business logic that ends up in every part of your code and architecture. It could be bash scripts, it could be Python, it could be Spark, maybe it’s running in a lambda somewhere. Wherever it is, it’s important and integral to the data migration, as missing even one small piece of business logic will lead to incorrect results downstream, problems during validation, and a distrust of the data migration in general.

- Document all business logic.

- Try to keep business logic in a single place.

Don’t make the same mistake in the new data platform or system you’re migrating to. Keep the business logic well documented and, where possible, in a single place.
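As a rough idea of what "a single place" can look like, here is a minimal sketch of one module that owns a rule, documents it, and gets imported by every pipeline that needs it. The rule itself (a customer counts as "active" if they ordered in the last 90 days) and all the names are assumptions for illustration, not anything from a real system.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Assumed rule for illustration: "active" means an order within the last 90 days.
ACTIVE_WINDOW_DAYS = 90


@dataclass(frozen=True)
class Customer:
    customer_id: int
    last_order: date


def is_active(customer: Customer, today: date) -> bool:
    """Business rule: a customer is active if they ordered within the window.

    Every pipeline imports this function instead of re-implementing the check.
    """
    return (today - customer.last_order) <= timedelta(days=ACTIVE_WINDOW_DAYS)
```

When the definition of "active" changes, there is one function and one docstring to update, instead of a hunt through bash scripts, Spark jobs, and lambdas.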

Seemingly simple details become a major pain.

Another common “gotcha” when doing data migrations is thinking ahead of time that “thing x” will be a pain, and “thing y” will be easy. And of course, the opposite happens. This usually comes from not digging in far enough when planning for the data migration, and missing the small details.

Typically these problems arise because the data migration involves new tooling and different architecture, schemas, and all other sorts of improvements. Integrating and migrating old thought patterns into the new system will give rise to at least a few “clashes.” What are some examples of little details?

- Maybe processing files with AWS lambdas and realizing every so often you get a file too large to fit on disk or in memory with your current code.

- Sharing data sources while doing the data migration.

- Tooling versions.
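For the oversized-file case, one common way out is to stop reading the whole file at once and stream it in bounded chunks instead. The sketch below is a generic illustration, not tied to any AWS API: it assumes the source can be opened as a file-like object with a `read()` method, and the function name and chunk size are made up.

```python
import io
from typing import BinaryIO, Iterator


def iter_lines_bounded(stream: BinaryIO, chunk_size: int = 1024 * 1024) -> Iterator[bytes]:
    """Yield complete lines while holding roughly one chunk plus one partial line in memory."""
    leftover = b""
    while True:
        chunk = stream.read(chunk_size)
        if not chunk:
            break
        lines = (leftover + chunk).split(b"\n")
        leftover = lines.pop()  # last piece is an incomplete line (or empty)
        yield from lines
    if leftover:
        yield leftover  # final line without a trailing newline


# Usage with an in-memory stand-in for a large file.
sample = io.BytesIO(b"id,total\n1,10\n2,20")
row_count = sum(1 for _ in iter_lines_bounded(sample, chunk_size=4))
```

Memory use is then tied to the chunk size and the longest line rather than the file size, which is the detail that tends to get missed until the one giant file shows up.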

Typically refactors are taking place at the same time.

A good Data Engineer never lets too much tech debt slide. But this can be a double-edged sword, mostly because this type of refactoring can extend the time it takes to complete the migration, and produce new bugs in the process! It’s rare that any data migration involves simply picking up code and dropping it in a new spot, mostly because data schemas change, tooling versions change, and sometimes the tooling itself is different.

- Bugs are found when migrating “old”/“current” code.

- Refactoring and updating code takes time, usually longer than most think.

- New bugs are introduced when refactoring.

How to validate the migration results gets complicated.

Ugh, and probably the worst one of them all. The one everyone ignores till the bitter end, thinking it’s no big deal, yet this one has the potential to take more time than the actual migration itself. Of course, during a data migration bugs were most likely found in the old code, making the new results “more accurate”, although this can be a hard pill to swallow for those who’ve been using that old data for years.

As well, schemas change, history has to be loaded up, and all this has to be reconciled in a consumable way, many times with Dashboards or other graphs that take a fair amount of work in themselves to complete, requiring their own data pipelines just to keep fresh. Think of that, it’s like its own mini-project within the data migration!
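Before any dashboards get built, a bare-bones reconciliation can at least say where old and new disagree. This is a minimal sketch under some loud assumptions: both datasets fit in memory as lists of dicts, they share a unique key column, and the schemas have already been mapped so rows can be compared directly. The function and field names are hypothetical.

```python
from typing import Any, Dict, List


def reconcile(old_rows: List[Dict[str, Any]], new_rows: List[Dict[str, Any]], key: str) -> Dict[str, Any]:
    """Compare two datasets by key and summarize where they differ."""
    # Assumes `key` is unique within each dataset.
    old = {row[key]: row for row in old_rows}
    new = {row[key]: row for row in new_rows}
    shared = old.keys() & new.keys()
    return {
        "old_count": len(old_rows),
        "new_count": len(new_rows),
        "missing_in_new": sorted(old.keys() - new.keys()),
        "unexpected_in_new": sorted(new.keys() - old.keys()),
        "mismatched": sorted(k for k in shared if old[k] != new[k]),
    }


# Made-up sample data for illustration.
old_data = [{"id": 1, "total": 10}, {"id": 2, "total": 20}, {"id": 3, "total": 30}]
new_data = [{"id": 1, "total": 10}, {"id": 2, "total": 25}, {"id": 4, "total": 40}]
report = reconcile(old_data, new_data, key="id")
```

Every entry in `mismatched` still needs a human to decide whether it is a migration bug or an old bug that the new code fixed, and that is where the time goes.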

Musings

Data Migrations suck. They always will.